-

Medvedev arrives in Indian Wells after being stranded in Dubai

Medvedev arrives in Indian Wells after being stranded in Dubai

-

Trump fires homeland security chief Kristi Noem

-

Mideast war risks pulling more in as conflict boils over

Mideast war risks pulling more in as conflict boils over

-

Wales' James Botham 'sledged' by grandfather Ian Botham after Six Nations error

-

India hero Samson eyes 'one more' big knock in T20 World Cup final

India hero Samson eyes 'one more' big knock in T20 World Cup final

-

Britney Spears detained on suspicion of driving while intoxicated

-

Grooming makes Crufts debut as UK dog show widens offer

Grooming makes Crufts debut as UK dog show widens offer

-

Townsend insists Scots' focus solely on France not Six Nations title race

-

UK sends more fighter jets to Gulf: PM

UK sends more fighter jets to Gulf: PM

-

EU to ban plant-based 'bacon' but veggie 'burgers' survive chop

-

Leagues Cup to hold matches in Mexico for first time

Leagues Cup to hold matches in Mexico for first time

-

India reach T20 World Cup final after England fail in epic chase

-

Conservative Anglicans press opposition to Church's first woman leader

Conservative Anglicans press opposition to Church's first woman leader

-

Iran players sing anthem and salute at Women's Asian Cup

-

India beat England in high-scoring T20 World Cup semi-final

India beat England in high-scoring T20 World Cup semi-final

-

Mideast war traps 20,000 seafarers, 15,000 cruise passengers in Gulf

-

Italy bring back Brex to face England

Italy bring back Brex to face England

-

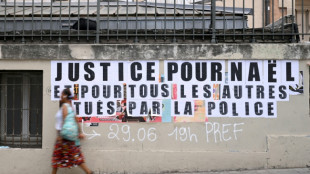

French policeman to be tried over 2023 killing of teen

-

Oil prices rise, stocks slide as Middle East war stirs supply concerns

Oil prices rise, stocks slide as Middle East war stirs supply concerns

-

More flights take off despite continued fighting in Middle East

-

Ukraine, Russia free 200 POWs each

Ukraine, Russia free 200 POWs each

-

Middle East war halts work at WHO's Dubai emergency hub

-

Paramount's Ellison vows CNN editorial independence

Paramount's Ellison vows CNN editorial independence

-

US says attacks on alleged drug boats have spooked traffickers

-

Dempsey returns as Scotland shuffle pack for Six Nations clash against France

Dempsey returns as Scotland shuffle pack for Six Nations clash against France

-

India pile up 253-7 against England in T20 World Cup semi-final

-

Wary Europeans pledge 'defensive' military aid in Mideast war

Wary Europeans pledge 'defensive' military aid in Mideast war

-

Seven countries to boycott Paralympics ceremony over Russia: organisers

-

UK's Crufts dog show opens with growing global appeal

UK's Crufts dog show opens with growing global appeal

-

PSG prepare for Chelsea clash with Monaco rematch

-

Google opens AI centre as Berlin defends US tech reliance

Google opens AI centre as Berlin defends US tech reliance

-

Second Iranian ship nears Sri Lanka after submarine attack

-

Portugal mourns acclaimed writer Antonio Lobo Antunes

Portugal mourns acclaimed writer Antonio Lobo Antunes

-

Union loses fight against Tesla at German factory

-

Wales revel in being the underdogs, says skipper Lake

Wales revel in being the underdogs, says skipper Lake

-

German school students rally against army recruitment drive

-

Wary European states pledge military aid for Cyprus, Gulf

Wary European states pledge military aid for Cyprus, Gulf

-

Liverpool injuries frustrating Slot in tough season

-

Real Madrid will 'keep fighting' in title race, vows Arbeloa

Real Madrid will 'keep fighting' in title race, vows Arbeloa

-

Australia join South Korea in quarters of Women's Asian Cup

-

Kane to miss Bayern game against Gladbach with calf knock

Kane to miss Bayern game against Gladbach with calf knock

-

Henman says Raducanu needs more physicality to rise up rankings

-

France recall fit-again Jalibert to face Scotland

France recall fit-again Jalibert to face Scotland

-

Harry Styles fans head in one direction: to star's home village

-

Syrian jailed over stabbing at Berlin Holocaust memorial

Syrian jailed over stabbing at Berlin Holocaust memorial

-

Second Iranian ship heading to Sri Lanka after submarine attack

-

Middle East war spirals as Iran hits Kurds in Iraq

Middle East war spirals as Iran hits Kurds in Iraq

-

Norris hungrier than ever to defend Formula One world title

-

Fatherhood, sleep, T20 World Cup final: Henry's whirlwind journey

Fatherhood, sleep, T20 World Cup final: Henry's whirlwind journey

-

Conservative Nigerian city sees women drive rickshaw taxis

Generative AI's most prominent skeptic doubles down

Two and a half years after ChatGPT rocked the world, scientist and writer Gary Marcus remains generative artificial intelligence's great skeptic, offering a counter-narrative to Silicon Valley's AI true believers.

Marcus became a prominent figure of the AI revolution in 2023, when he sat beside OpenAI chief Sam Altman at a Senate hearing in Washington as both men urged politicians to take the technology seriously and consider regulation.

Much has changed since then. Altman has abandoned his calls for caution, instead teaming up with Japan's SoftBank and funds in the Middle East to propel his company to sky-high valuations as he tries to make ChatGPT the next era-defining tech behemoth.

"Sam's not getting money anymore from the Silicon Valley establishment," and his seeking funding from abroad is a sign of "desperation," Marcus told AFP on the sidelines of the Web Summit in Vancouver, Canada.

Marcus's criticism centers on a fundamental belief: generative AI, the predictive technology that churns out seemingly human-level content, is simply too flawed to be transformative.

The large language models (LLMs) that power these capabilities are inherently broken, he argues, and will never deliver on Silicon Valley's grand promises.

"I'm skeptical of AI as it is currently practiced," he said. "I think AI could have tremendous value, but LLMs are not the way there. And I think the companies running it are not mostly the best people in the world."

His skepticism stands in stark contrast to the prevailing mood at the Web Summit, where most conversations among 15,000 attendees focused on generative AI's seemingly infinite promise.

Many believe humanity stands on the cusp of achieving superintelligence or artificial general intelligence (AGI), technology that could match and even surpass human capability.

That optimism has driven OpenAI's valuation to $300 billion, an unprecedented level for a startup, with billionaire Elon Musk's xAI racing to keep pace.

Yet for all the hype, the practical gains remain limited.

The technology excels mainly at coding assistance for programmers and text generation for office work. AI-created images, while often entertaining, serve primarily as memes or deepfakes, offering little obvious benefit to society or business.

Marcus, a longtime New York University professor, champions a fundamentally different approach to building AI -- one he believes might actually achieve human-level intelligence in ways that current generative AI never will.

"One consequence of going all-in on LLMs is that any alternative approach that might be better gets starved out," he explained.

This tunnel vision will "cause a delay in getting to AI that can help us beyond just coding -- a waste of resources."

- 'Right answers matter' -

Instead, Marcus advocates for neurosymbolic AI, an approach that attempts to rebuild human logic artificially rather than simply training computer models on vast datasets, as is done with ChatGPT and similar products like Google's Gemini or Anthropic's Claude.

He dismisses fears that generative AI will eliminate white-collar jobs, citing a simple reality: "There are too many white-collar jobs where getting the right answer actually matters."

This points to AI's most persistent problem: hallucinations, the technology's well-documented tendency to produce confident-sounding mistakes.

Even AI's strongest advocates acknowledge this flaw may be impossible to eliminate.

Marcus recalls a telling exchange from 2023 with LinkedIn founder Reid Hoffman, a Silicon Valley heavyweight: "He bet me any amount of money that hallucinations would go away in three months. I offered him $100,000 and he wouldn't take the bet."

Looking ahead, Marcus warns of a darker consequence once investors realize generative AI's limitations: companies like OpenAI, he predicts, will inevitably monetize their most valuable asset, user data.

"The people who put in all this money will want their returns, and I think that's leading them toward surveillance," he said, pointing to Orwellian risks for society.

"They have all this private data, so they can sell that as a consolation prize."

Marcus acknowledges that generative AI will find useful applications in areas where occasional errors don't matter much.

"They're very useful for auto-complete on steroids: coding, brainstorming, and stuff like that," he said.

"But nobody's going to make much money off it because they're expensive to run, and everybody has the same product."

E.Burkhard--VB