-

Chile police arrest suspect over deadly wildfires

Chile police arrest suspect over deadly wildfires

-

Djokovic eases into Melbourne third round - with help from a tree

-

Keys draws on champion mindset to make Australian Open third round

Keys draws on champion mindset to make Australian Open third round

-

Knicks halt losing streak with record 120-66 thrashing of Nets

-

Philippine President Marcos hit with impeachment complaint

Philippine President Marcos hit with impeachment complaint

-

Trump to unveil 'Board of Peace' at Davos after Greenland backtrack

-

Bitter-sweet as Pegula crushes doubles partner at Australian Open

Bitter-sweet as Pegula crushes doubles partner at Australian Open

-

Hong Kong starts security trial of Tiananmen vigil organisers

-

Keys into Melbourne third round with Sinner, Djokovic primed

Keys into Melbourne third round with Sinner, Djokovic primed

-

Bangladesh launches campaigns for first post-Hasina polls

-

Stocks track Wall St rally as Trump cools tariff threats in Davos

Stocks track Wall St rally as Trump cools tariff threats in Davos

-

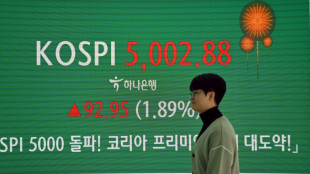

South Korea's economy grew just 1% in 2025, lowest in five years

-

Snowboard champ Hirano suffers fractures ahead of Olympics

Snowboard champ Hirano suffers fractures ahead of Olympics

-

'They poisoned us': grappling with deadly impact of nuclear testing

-

Keys blows hot and cold before making Australian Open third round

Keys blows hot and cold before making Australian Open third round

-

Philippine journalist found guilty of terror financing

-

Greenlanders doubtful over Trump resolution

Greenlanders doubtful over Trump resolution

-

Real Madrid top football rich list as Liverpool surge

-

'One Battle After Another,' 'Sinners' tipped to top Oscar noms

'One Battle After Another,' 'Sinners' tipped to top Oscar noms

-

Higher heating costs add to US affordability crunch

-

Eight stadiums to host 2027 Rugby World Cup matches in Australia

Eight stadiums to host 2027 Rugby World Cup matches in Australia

-

Plastics everywhere, and the myth that made it possible

-

Interim Venezuela leader to visit US

Interim Venezuela leader to visit US

-

Australia holds day of mourning for Bondi Beach shooting victims

-

Liverpool cruise as Bayern reach Champions League last 16

Liverpool cruise as Bayern reach Champions League last 16

-

Fermin Lopez brace leads Barca to win at Slavia Prague

-

Newcastle pounce on PSV errors to boost Champions League last-16 bid

Newcastle pounce on PSV errors to boost Champions League last-16 bid

-

Fermin Lopez brace hands Barca win at Slavia Prague

-

Kane double fires Bayern into Champions League last 16

Kane double fires Bayern into Champions League last 16

-

Newcastle pounce on PSV errors to close in on Champions League last 16

-

In Davos speech, Trump repeatedly refers to Greenland as 'Iceland'

In Davos speech, Trump repeatedly refers to Greenland as 'Iceland'

-

Liverpool see off Marseille to close on Champions League last 16

-

Caicedo strikes late as Chelsea end Pafos resistance

Caicedo strikes late as Chelsea end Pafos resistance

-

US Republicans begin push to hold Clintons in contempt over Epstein

-

Trump says agreed 'framework' for US deal over Greenland

Trump says agreed 'framework' for US deal over Greenland

-

Algeria's Zidane and Belghali banned over Nigeria AFCON scuffle

-

Iran says 3,117 killed during protests, activists fear 'far higher' toll

Iran says 3,117 killed during protests, activists fear 'far higher' toll

-

Atletico frustrated in Champions League draw at Galatasaray

-

Israel says struck Syria-Lebanon border crossings used by Hezbollah

Israel says struck Syria-Lebanon border crossings used by Hezbollah

-

Snapchat settles to avoid social media addiction trial

-

'Extreme cold': Winter storm forecast to slam huge expanse of US

'Extreme cold': Winter storm forecast to slam huge expanse of US

-

Jonathan Anderson reimagines aristocrats in second Dior Homme collection

-

Former England rugby captain George to retire in 2027

Former England rugby captain George to retire in 2027

-

Israel launches wave of fresh strikes on Lebanon

-

Ubisoft unveils details of big restructuring bet

Ubisoft unveils details of big restructuring bet

-

Abhishek fireworks help India beat New Zealand in T20 opener

-

Huge lines, laughs and gasps as Trump lectures Davos elite

Huge lines, laughs and gasps as Trump lectures Davos elite

-

Trump rules out 'force' against Greenland but demands talks

-

Stocks steadier as Trump rules out force to take Greenland

Stocks steadier as Trump rules out force to take Greenland

-

World's oldest cave art discovered in Indonesia

Facebook's algorithm doesn't alter people's beliefs: research

Do social media echo chambers deepen political polarization, or simply reflect existing social divisions?

A landmark research project that investigated Facebook around the 2020 US presidential election published its first results Thursday. Contrary to widespread assumptions, it found that the platform's often-criticized content-ranking algorithm does not shape users' political beliefs.

The work is the product of a collaboration between Meta -- the parent company of Facebook and Instagram -- and a group of academics from US universities who were given broad access to internal company data and who signed up tens of thousands of users for experiments.

The academic team wrote four papers, published in the scientific journals Science and Nature, examining the social media giant's role in American democracy.

Overall, the algorithm was found to be "extremely influential in people's on-platform experiences," said project leaders Talia Stroud of the University of Texas at Austin and Joshua Tucker of New York University.

In other words, it strongly influenced what users saw and how much they used the platforms.

"But we also know that changing the algorithm for even a few months isn't likely to change people's political attitudes," they said. Attitudes were measured by users' answers to surveys after they took part in three-month-long experiments that altered how they received content.

The authors acknowledged that this result might reflect the changes not being in place long enough to make an impact, given that the United States has been growing more polarized for decades.

Nevertheless, "these findings challenge popular narratives blaming social media echo chambers for the problems of contemporary American democracy," wrote the authors of one of the papers, published in Nature.

- 'No silver bullet' -

Facebook's algorithm, which uses machine learning to decide which posts rise to the top of users' feeds based on their interests, has been accused of creating "filter bubbles" and enabling the spread of misinformation.

Researchers recruited around 40,000 volunteers through invitations placed in their Facebook and Instagram feeds. They then designed an experiment in which one group saw feeds ranked by the normal algorithm, while the other saw posts listed from newest to oldest.

Facebook originally used a reverse-chronological system, and some observers have suggested that switching back to it would reduce social media's harmful effects.
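The difference between the two feeds compared in the experiment can be illustrated with a toy sketch. It assumes each post carries a single hypothetical `predicted_interest` score standing in for Facebook's machine-learned ranking, whose real inputs and weights are not described in the research summarized here. The chronological feed simply sorts the same posts from newest to oldest.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Post:
    post_id: str
    posted_at: datetime
    # Hypothetical stand-in for a machine-learned prediction of how interested
    # a user is in this post; Facebook's actual model is not public.
    predicted_interest: float


def ranked_feed(posts: list[Post]) -> list[Post]:
    """Algorithmic feed: highest predicted interest first."""
    return sorted(posts, key=lambda p: p.predicted_interest, reverse=True)


def chronological_feed(posts: list[Post]) -> list[Post]:
    """Reverse-chronological feed: newest post first."""
    return sorted(posts, key=lambda p: p.posted_at, reverse=True)


posts = [
    Post("a", datetime(2020, 10, 1, 9, 0), predicted_interest=0.2),
    Post("b", datetime(2020, 10, 1, 12, 0), predicted_interest=0.9),
    Post("c", datetime(2020, 10, 1, 15, 0), predicted_interest=0.5),
]

print([p.post_id for p in ranked_feed(posts)])         # ['b', 'c', 'a']
print([p.post_id for p in chronological_feed(posts)])  # ['c', 'b', 'a']
```

Both feeds contain exactly the same posts. Only the ordering changes, and that ordering determines which posts users see first.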

The team found that users in the chronological feed group spent roughly half as much time on Facebook and Instagram as those in the algorithm group.

On Facebook, those in the chronological group saw more content from moderate friends, as well as more sources with ideologically mixed audiences.

But the chronological feed also increased the amount of political and untrustworthy content seen by users.

Despite these differences, the switch did not produce detectable shifts in measured political attitudes.

"The findings suggest that chronological feed is no silver bullet for issues such as political polarization," said coauthor Jennifer Pan of Stanford.

- Meta welcomes findings -

In a second paper in Science, the same team examined the impact of reshared content, which makes up more than a quarter of what Facebook users see.

Suppressing reshares has been suggested as a means to control harmful viral content.

The team ran a controlled experiment in which one group of Facebook users saw their feeds unchanged, while another had reshared content removed.

Removing reshares reduced the proportion of political content seen, resulting in reduced political knowledge -- but again did not impact downstream political attitudes or behaviors.

A third paper, in Nature, probed the impact of content from "like-minded" users, pages, and groups appearing in people's feeds. The researchers found that such content made up the majority of what active adult Facebook users in the US see.

But in an experiment involving over 23,000 Facebook users, suppressing like-minded content once more had no impact on ideological extremity or belief in false claims.

A fourth paper, in Science, did, however, confirm extreme "ideological segregation" on Facebook, with politically conservative users more siloed in their news sources than liberals.

What's more, 97 percent of political news URLs on Facebook rated as false by Meta's third-party fact-checking program -- which AFP is part of -- were seen by more conservatives than liberals.

Meta welcomed the overall findings.

They "add to a growing body of research showing there is little evidence that social media causes harmful... polarization or has any meaningful impact on key political attitudes, beliefs or behaviors," said Nick Clegg, the company's president of global affairs.

E.Schubert--BTB