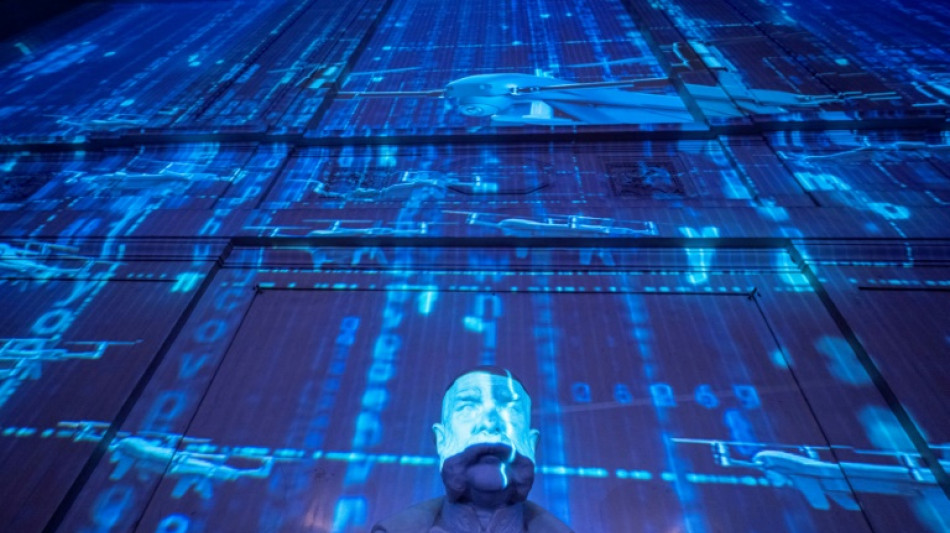

Firms and researchers at odds over superhuman AI

Hype is growing from leaders of major AI companies that "strong" computer intelligence will imminently outstrip humans, but many researchers in the field see the claims as marketing spin.

The belief that human-or-better intelligence -- often called "artificial general intelligence" (AGI) -- will emerge from current machine-learning techniques fuels hypotheses for the future ranging from machine-delivered hyperabundance to human extinction.

"Systems that start to point to AGI are coming into view," OpenAI chief Sam Altman wrote in a blog post last month. Anthropic's Dario Amodei has said the milestone "could come as early as 2026".

Such predictions help justify the hundreds of billions of dollars being poured into computing hardware and the energy supplies to run it.

Others, though, are more sceptical.

Meta's chief AI scientist Yann LeCun told AFP last month that "we are not going to get to human-level AI by just scaling up LLMs" -- the large language models behind current systems like ChatGPT or Claude.

LeCun's view appears backed by a majority of academics in the field.

Over three-quarters of respondents to a recent survey by the US-based Association for the Advancement of Artificial Intelligence (AAAI) agreed that "scaling up current approaches" was unlikely to produce AGI.

- 'Genie out of the bottle' -

Some academics believe that many of the companies' claims, which bosses have at times flanked with warnings about AGI's dangers for mankind, are a strategy to capture attention.

Businesses have "made these big investments, and they have to pay off," said Kristian Kersting, a leading researcher at the Technical University of Darmstadt in Germany and AAAI member.

"They just say, 'this is so dangerous that only I can operate it, in fact I myself am afraid but we've already let the genie out of the bottle, so I'm going to sacrifice myself on your behalf -- but then you're dependent on me'."

Scepticism among academic researchers is not total, with prominent figures such as Nobel-winning physicist Geoffrey Hinton and 2018 Turing Award winner Yoshua Bengio warning about the dangers of powerful AI.

"It's a bit like Goethe's 'The Sorcerer's Apprentice', you have something you suddenly can't control any more," Kersting said -- referring to a poem in which a would-be sorcerer loses control of a broom he has enchanted to do his chores.

A similar, more recent thought experiment is the "paperclip maximiser".

This imagined AI would pursue its goal of making paperclips so single-mindedly that it would turn Earth and ultimately all matter in the universe into paperclips or paperclip-making machines -- having first got rid of human beings that it judged might hinder its progress by switching it off.

While not "evil" as such, the maximiser would fall fatally short on what thinkers in the field call "alignment" of AI with human objectives and values.

Kersting said he "can understand" such fears -- while suggesting that "human intelligence, its diversity and quality is so outstanding that it will take a long time, if ever" for computers to match it.

He is far more concerned with near-term harms from already-existing AI, such as discrimination in cases where it interacts with humans.

- 'Biggest thing ever' -

The apparently stark gulf in outlook between academics and AI industry leaders may simply reflect people's attitudes as they pick a career path, suggested Sean O hEigeartaigh, director of the AI: Futures and Responsibility programme at Britain's Cambridge University.

"If you are very optimistic about how powerful the present techniques are, you're probably more likely to go and work at one of the companies that's putting a lot of resource into trying to make it happen," he said.

Even if Altman and Amodei are "quite optimistic" about rapid timescales and AGI emerges much later, "we should be thinking about this and taking it seriously, because it would be the biggest thing that would ever happen," O hEigeartaigh added.

"If it were anything else... a chance that aliens would arrive by 2030 or that there'd be another giant pandemic or something, we'd put some time into planning for it."

The challenge can lie in communicating these ideas to politicians and the public.

Talk of super-AI "does instantly create this sort of immune reaction... it sounds like science fiction," O hEigeartaigh said.

C.Koch--VB